The Data Sharing Project — improving student services and outcomes through data and continuous improvement support.

The Data Sharing Project provides many services to our partners. These supports include continuous and quality improvement, research, evaluation, technical assistance, data education, and case management, as well as COVID-19 data supports and resources.

Over time, we plan to highlight all of the ways we support our partners, but today, we want to highlight our evaluation approach and the framework we use to guide work with our partners.

So, what is evaluation anyway?

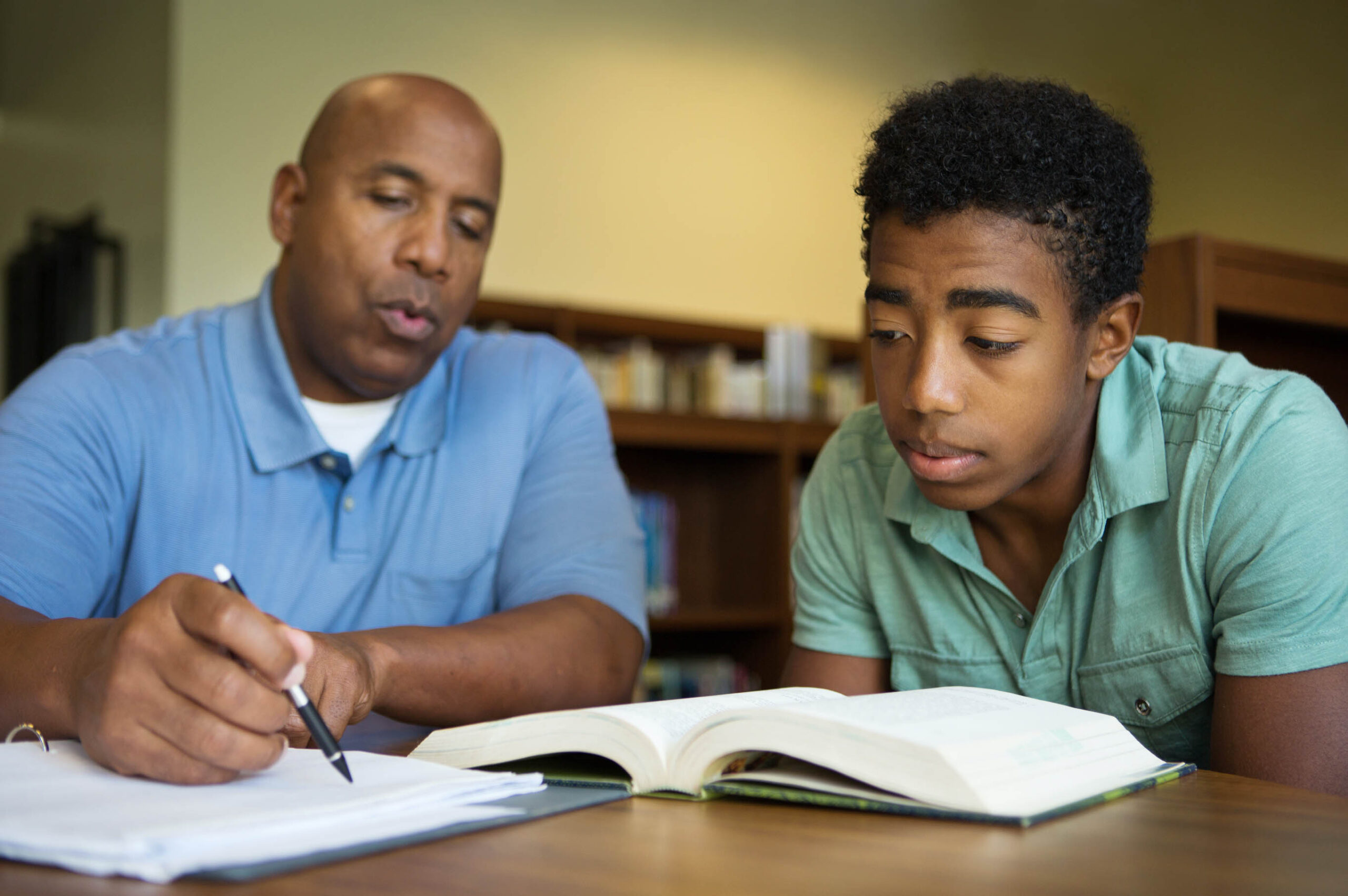

Evaluation is defined as the systematic investigation of the quality of programs for the purpose of organizational development and capacity building, which leads to improvements and accountability for programs, while also providing organizational and social value (Yarbrough, Shulha, Hopson, & Caruthers, 2011). To many, evaluation is a scary word. It strikes fear into even the most well-constructed and time-tested programs. This is because evaluation is often tied to program funding or reporting and is rarely done outside of that space, sometimes due to capacity, but often because evaluation is an expensive tool that many programs cannot afford beyond what is required. Through our partnerships, the Data Sharing Project aims to fill that gap and provide our partners with what they need to improve their programs by asking deep questions, rooted in research, with the goal of improving student outcomes over time.

Within the Data Sharing Project, we approach evaluation as a collaborative activity where participants are encouraged to explore how they can further improve their existing programming in a safe and judgment-free environment. This starts with trust, a willingness to improve, and hard work. As we collaborate with our partners, we follow their lead and focus on answering the questions they want or need to answer. We focus not only on program impact but also on the inner workings of program activities and structures to better understand exactly how they are impacting students. Through the partnership, we examine outcomes, give context to the data we are sharing through the project, and provide actionable recommendations based on the findings.

Our evaluation team also approaches this work as educators. We have a full-time evaluator mapping the course from cycle to cycle, and a data analyst who provides needed data and reporting. As we move through cycles with agencies, we break down what is being done, talk through the process, and provide them with the skills they need to think about their work through a continuous and quality improvement lens. Not only is this great learning for agencies, it also keeps the continuous improvement work top of mind and leads to the utilization of the evaluation results.

The Data Sharing Project Framework

Mapping out the evaluation process is very important. Through the DSP, we utilize an implementation framework to guide our evaluation and continuous improvement work (see below). An implementation framework is important because it provides a conceptual guide to the use of effective implementation practices. It also helps to differentiate the stages of implementation that may occur at the beginning of a program from the practice of a well-established program. The framework is linear in its overall approach, but it also leaves room for feedback loops based on data-driven decisions that help to improve practices over time.

Data Sharing Project Evaluation Framework

Exploration

- Needs assessment

- Focus groups

- Examine services/intervention components

- Identify implementers/service providers/staff

- Assess fit/leadership participation

- Consider implementation drivers

Installation

- Prepare organization for next steps

- Prepare staff for service changes

- Ensure resources are available

- Prepare implementation drivers

- Create improvement cycles

Initial Implementation

- Deploy data systems – reporting

- Adjust implementation drivers as needed

- Manage change (turnover, programs, enrollment)

- Initiate improvement cycles

Full Implementation

- Deploy data systems – evaluation

- Monitor and manage implementation drivers

- Achieve fidelity and outcome benchmarks

- Further improve fidelity and outcomes

Two to Four Years

Note. Hargrave (2021). Adapted from The National Implementation Research Network (NIRN) at The University of North Carolina at Chapel Hill’s FPG Child Development Institute, 2013.

Implementation science is a framework that focuses on the process of implementing evidence-based programs and practices. The purpose of implementation is not to validate evidence-based programs, but to focus on effective implementation that bridges the gap between science and practice. Developing an effective intervention is useful, but ensuring the programs can be maintained is most important. Therefore, it is vital to understand whether and how the programs are successfully implemented, which happens in phases. Each implementation phase is examined to assess outcomes.

The four stages of implementation that the DSP focuses on are part of the Make it Happen framework, which includes exploration, installation, initial implementation, and full implementation (Fixsen, Naoom, Blasé, Friedman, & Wallace, 2005).

The exploration stage is designed to identify the need for the program or intervention, assess the program’s fit with the community’s needs, and prepare the organization by ensuring there is information and support. During the installation stage, the program prepares for implementing new practices that were created in the improvement cycle and ensures resources are available. Initial implementation is when changes begin to happen on all levels as the improvement cycle is implemented, data systems are provided, and necessary adjustments are made. In the full implementation stage, new practices are fully implemented, evaluations are conducted, and outcomes and accuracy are assessed.

Reaching full implementation typically takes two to four years. However, the evaluation process is ongoing and continues to evolve as programs evolve.

We could never do this work without our amazing Data Sharing Project partners. The agencies we work with are part of this work because they want to know how they are impacting students, and they want to shift their work when it does not align with current goals or when outcomes are not quite what they expected. Through this project we aim to change the way evaluation is perceived. We hope people will stop seeing evaluation as a scary concept and really appreciate the power it brings to continuous and quality improvement. It’s about learning, growing, and sharing best practices so other programs can benefit from collaborative learning.

Learn More

Have questions about this blog? Interested in learning more about what we do? Contact Christina Spence at Chspence@wsfcs.k12.nc.us.